What is Big Data? Information gathered from a variety of sources such as blogs, social media, email, sensors, photographs, and video, which is largely unstructured and huge in volume, counts as Big Data. Enterprises, vendors, and clients all need servers to store this data and a means to analyze it for their business purposes. The main driver behind all this data is the revolution that social media brought to the table, which created many new types of data sources. Having new data sources is one thing; running web applications on so many different types of devices, like iPads, iPhones, and Android devices, is another cause of Big Data, because it gives users the comfort of updating and posting data all the time, wherever they are. Data at this scale does not fit well into traditional relational database products. Big Data is often characterized by the three Vs (VVV): Volume, Variety, and Velocity. That is, the volume is large, the types of data are varied, and the incoming data needs quick analysis and decision making.

Big Data first had an impact on companies like Google, Yahoo, and similar companies in the IT world. It then reached other industries such as engineering, manufacturing, and healthcare. So what is the solution, and how can a business handle this amount of data in a way that fits its needs? Many companies use technologies like Hadoop or Cassandra to analyze their data and integrate it with the traditional enterprise data in their warehouse.

So what is Hadoop? What is Cassandra? How are they different, and how is each of them useful? Hadoop is an open source framework for highly parallel, data-intensive analytics. It supports MapReduce, a framework for distributed processing of large data sets on clusters.

Hadoop was developed largely at Yahoo as an open source clone of Google’s MapReduce infrastructure. It takes care of running your code across a cluster of machines. Its responsibilities include chunking up the input data, sending it to each machine, running the code on each chunk, checking that the code ran, passing any results either on to further processing stages or to the final output location, performing the sort that occurs between the map and reduce stages and sending each chunk of that sorted data to the right machine, and writing debugging information on each job’s progress, among other things. A typical Hadoop instance contains three main modules: Hadoop Common, the Hadoop Distributed File System (HDFS), and the MapReduce Engine.

Hadoop Common is the underlying framework layer of Hadoop. The Hadoop Common package contains the utilities, scripts, and libraries necessary to start Hadoop. Hadoop Common requires the Java Runtime Environment (JRE) 1.6 to run; however, applications that run on Hadoop can be written in other programming languages such as C++.

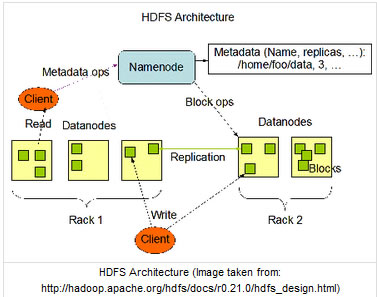

HDFS, the Hadoop Distributed File System, is designed to reliably store very large files across the machines of a large cluster. The primary components of HDFS are the Name Node, a Secondary Name Node, and Data Nodes. The Name Node is a single, unique server that keeps the directory structure and coordinates all file system operations. Data Nodes are the servers that house the data.

HDFS is a rack-aware file system, meaning the Name Node knows exactly which rack (or more specifically, which network switch) each Data Node is located in. This optimization keeps most large data transfers within a single rack instead of sending them across the network beyond the rack switch. With the default replication value of 3, data is stored on three Data Nodes: two on the same rack and one on a different rack. HDFS is also self-healing: Data Nodes can talk to each other to rebalance data, to move copies around, and to keep the replication of data high.

While the HDFS approach allows for high redundancy, it cannot be considered highly available because of its single point of failure, the one Name Node. This brings us to the third component of HDFS, the Secondary Name Node. The Secondary Name Node keeps copies of the most recent versions of the Name Node’s metadata in case of failure. It will not take over in place of the Name Node if the Name Node goes down, but it will provide the Name Node with the directory structure and any incomplete operations, so that the Name Node can rebuild itself when it comes back online.
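The rack-aware placement described above (with a replication value of 3, two copies on one rack and one on another) can be sketched in a few lines of Python. This is only an illustration of the idea, not Hadoop's actual placement code; the function name `place_replicas` and the `nodes_by_rack` mapping are made up for this example.

```python
import random


def place_replicas(nodes_by_rack, replication=3, rng=None):
    """Pick Data Nodes for one block: up to two on one rack, the rest elsewhere.

    nodes_by_rack maps a rack name to the list of Data Nodes on that rack.
    Hypothetical helper for illustration only, not part of Hadoop.
    """
    rng = rng or random.Random()
    racks = [rack for rack, nodes in nodes_by_rack.items() if nodes]
    if not racks:
        raise ValueError("no data nodes available")

    # Choose a "home" rack and put up to two replicas on distinct nodes there.
    home = rng.choice(racks)
    local_count = min(2, replication, len(nodes_by_rack[home]))
    chosen = rng.sample(nodes_by_rack[home], local_count)

    # Put the remaining replicas on nodes from other racks, so that losing
    # the home rack (or its switch) does not lose every copy.
    remote_nodes = [n for rack in racks if rack != home for n in nodes_by_rack[rack]]
    remote_count = min(replication - len(chosen), len(remote_nodes))
    chosen += rng.sample(remote_nodes, remote_count)
    return chosen


cluster = {"rack1": ["dn1", "dn2", "dn3"], "rack2": ["dn4", "dn5"]}
print(place_replicas(cluster))  # three node names, two of them sharing a rack
```

Keeping two copies on one rack makes most replication traffic rack-local, while the third copy protects against the loss of a whole rack.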

The MapReduce Engine consists of one Job Tracker and a Task Tracker assigned to every node. Applications submit jobs to the Job Tracker, and the Job Tracker pushes the work to the Task Trackers closest to the data. With a rack-aware file system such as HDFS, the Job Tracker knows on which node (and rack) the data is located, which keeps the work close to the data. Again, as with HDFS, this reduces network traffic on the main backbone network. The Task Tracker on each node spawns a separate Java Virtual Machine (JVM) process for each task, so that the Task Tracker itself does not fail if the running job crashes its JVM.
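To make "keeping the work close to the data" concrete, here is a minimal sketch of how a scheduler might prefer a node that holds the data, then a node on the same rack, and only then any other node. The function `pick_task_tracker` and its arguments are hypothetical names for this example; the real Job Tracker logic is more involved.

```python
def pick_task_tracker(block_locations, free_trackers, rack_of):
    """Return (node, locality) for the free Task Tracker closest to the data.

    block_locations: nodes that hold a replica of the input block.
    free_trackers:   nodes whose Task Tracker has a free slot.
    rack_of:         mapping of node name -> rack name.
    """
    # Best case: a free tracker on a node that already stores the block.
    for node in free_trackers:
        if node in block_locations:
            return node, "node-local"

    # Next best: a free tracker on the same rack as one of the replicas.
    data_racks = {rack_of[n] for n in block_locations}
    for node in free_trackers:
        if rack_of[node] in data_racks:
            return node, "rack-local"

    # Otherwise the data has to cross the backbone network.
    if free_trackers:
        return free_trackers[0], "off-rack"
    return None, "no free tracker"


rack_of = {"dn1": "rack1", "dn2": "rack1", "dn3": "rack2"}
print(pick_task_tracker(["dn1"], ["dn3", "dn2"], rack_of))  # ('dn2', 'rack-local')
```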

The MapReduce paradigm consists of three steps. First, you write a mapper function or script that goes through your input data and outputs a series of keys and values to use in calculating the results. The keys are used to cluster together the bits of data that will be needed to calculate a single output result. The unordered list of keys and values is then put through a sort step, which ensures that all the fragments with the same key are next to one another in the file. Finally, the reducer stage goes through the sorted output and receives all of the values that have the same key in a contiguous block.

Cassandra is also open source. It is a highly scalable distributed database, known as a “NoSQL” system for operational data management. Cassandra is a distributed key/value system with highly structured values that are held in a hierarchy similar to the classic database/table levels.
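The map, sort, and reduce steps of MapReduce described above can be sketched on a single machine with a word count, the usual first example. This is plain Python rather than Hadoop code, and the function names are my own; on a real cluster the same three stages run in parallel across many machines.

```python
from itertools import groupby
from operator import itemgetter


def mapper(line):
    """Map step: emit a (key, value) pair for every word in a line."""
    for word in line.lower().split():
        yield word, 1


def reducer(key, values):
    """Reduce step: combine all values that share one key."""
    return key, sum(values)


def map_reduce(lines):
    # 1. Map: run the mapper over every input record.
    pairs = [pair for line in lines for pair in mapper(line)]
    # 2. Sort: bring identical keys next to one another.
    pairs.sort(key=itemgetter(0))
    # 3. Reduce: hand each contiguous block of same-key values to the reducer.
    return [
        reducer(key, (value for _, value in group))
        for key, group in groupby(pairs, key=itemgetter(0))
    ]


print(map_reduce(["big data is big", "data is everywhere"]))
# [('big', 2), ('data', 2), ('everywhere', 1), ('is', 2)]
```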

NoSQL databases are next-generation databases that are not relational but scale horizontally to hundreds of nodes, with high availability and transparent load balancing.

Almost every day a new data store appears with some annoying name, which leaves thoughtful developers and architects asking: which one should I use?

Here is a list of some of the NoSQL databases and how they are categorized:

List of NoSQL Databases

| Core NoSQL Systems | Document Store | Key Value / Tuple Store | Graph Databases | Multimodel Databases | Object Databases | Grid & Cloud Database | XML Databases |
| --- | --- | --- | --- | --- | --- | --- | --- |
| Hadoop/HBase | MongoDB | DynamoDB | Neo4j | OrientDB | db4o | GigaSpaces | MarkLogic Server |
| Cassandra | CouchDB | Azure Table Storage | InfiniteGraph | AlchemyDB | Versant | Queplix | EMC Documentum xDB |
| Hypertable | RavenDB | MEMBASE | Sones | | Objectivity | Hazelcast | eXist |
| Amazon SimpleDB | Citrusleaf | Riak | InfoGrid | | Starcounter | Joafip | Sedna |
| Cloudata | Clusterpoint Server | Redis | HyperGraphDB | | Perst | | BaseX |
| Cloudera | ThruDB | LevelDB | Trinity | | ZODB | | Qizx |
| SciDB | Terrastore | Chordless | DEX | | Magma | | Berkeley DB XML |
| Stratosphere | SisoDB | GenieDB | AllegroGraph | | NEO | | |
| | SDB | Scalaris | BrightstarDB | | PicoLisp | | |
| | | Tokyo Cabinet/Tyrant | Bigdata | | Siaqodb | | |
| | | GT.M | Meronymy | | Sterling | | |
| | | Scalien | OpenLink Virtuoso | | Morantex | | |
| | | Berkeley DB | VertexDB | | EyeDB | | |
| | | Voldemort | FlockDB | | HSS Database | | |
| | | Dynomite | | | FramerD | | |
| | | KAI | | | | | |
| | | HamsterDB | | | | | |
| | | STSdb | | | | | |
| | | Tarantool/Box | | | | | |
| | | Maxtable | | | | | |
| | | Pincaster | | | | | |
| | | RaptorDB | | | | | |
| | | TIBCO Active Spaces | | | | | |
| | | Allegro-C | | | | | |
| | | nessDB | | | | | |
| | | HyperDex | | | | | |
| | | Mnesia | | | | | |
| | | LightCloud | | | | | |
| | | Hibari | | | | | |

As you can see, there are lots of key/value stores. Systems like memcached give programmers the power of treating a data store like an array, reading and writing values purely by a unique key. This leads to a very simple interface with three primitive operations: get the data associated with a particular key, store some data against a key, and delete a key and its data.
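The three primitive operations are small enough to show in full. Below is a toy in-memory version, assuming nothing beyond a Python dictionary; the class name `KeyValueStore` is hypothetical, and real stores such as memcached add networking, expiry, and distribution across nodes on top of this idea.

```python
class KeyValueStore:
    """Toy in-memory store exposing only get, set, and delete."""

    def __init__(self):
        self._data = {}

    def get(self, key, default=None):
        # Fetch the data associated with a key, or a default if it is absent.
        return self._data.get(key, default)

    def set(self, key, value):
        # Store some data against a key, replacing any previous value.
        self._data[key] = value

    def delete(self, key):
        # Remove a key and its data; report whether the key existed.
        existed = key in self._data
        self._data.pop(key, None)
        return existed


store = KeyValueStore()
store.set("user:42", {"name": "Ada"})
print(store.get("user:42"))  # {'name': 'Ada'}
store.delete("user:42")
print(store.get("user:42"))  # None
```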

The advantages of NoSQL data stores are:

- Elastic scaling: they scale out transparently when you add a new node, and they are usually designed with low-cost hardware in mind.

- NoSQL data stores are designed to handle Big Data volumes.

- NoSQL databases are designed to need less management, with automatic repair, data distribution, and simpler data models, which reduces the need for a dedicated DBA on site.

- NoSQL databases use clusters of cheap servers to manage exploding data and transaction volumes, so they tend to be cheap in comparison to the high license costs of RDBMS systems.

- Flexible data models: in key/value stores and document databases, schema changes don’t have to be managed as one complicated change unit, which lets applications iterate faster.

There are also parallel efforts that build on Hadoop and MapReduce or sit alongside them, such as Hive, Pig, Cascading, mrjob, Caffeine, S4, MapR, Acunu, Flume, Kafka, Azkaban, and Greenplum. I am not going to bore you with all of them, just Hive and Pig.

With Hive, you can program Hadoop jobs using SQL. It is a great interface for anyone coming from a relational database background. Hive does offer the ability to plug in custom code for situations that don’t fit into SQL, as well as a lot of tools for handling input and output. To use it, you set up structured tables that describe your input and output, issue load commands to ingest your files, and then write your queries as you would in any other relational database. Do be aware, though, that because of Hadoop’s focus on large-scale processing, the latency may mean that even simple jobs take minutes to complete, so it’s not a substitute for a real-time transactional database.
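The workflow of defining a table, loading data, and querying it can be illustrated with Python's built-in `sqlite3` module. To be clear, this uses SQLite as a stand-in and is not Hive: there is no Hadoop cluster involved, and the table and column names are invented for the example. It only shows the declarative style you would use.

```python
import sqlite3

# An in-memory SQLite database stands in for the warehouse.
conn = sqlite3.connect(":memory:")

# 1. Set up a structured table that describes the input.
conn.execute("CREATE TABLE page_views (user_id TEXT, url TEXT)")

# 2. Load the data (Hive would ingest files with a load command instead).
rows = [("u1", "/home"), ("u2", "/home"), ("u1", "/pricing")]
conn.executemany("INSERT INTO page_views VALUES (?, ?)", rows)

# 3. Query it as you would in any relational database.
query = (
    "SELECT url, COUNT(*) AS views FROM page_views "
    "GROUP BY url ORDER BY views DESC, url"
)
top_pages = conn.execute(query).fetchall()
print(top_pages)  # [('/home', 2), ('/pricing', 1)]
```

SQLite answers this in milliseconds; the same kind of query on Hive would be turned into batch jobs over the cluster, which is where the latency mentioned above comes from.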

The Apache Pig project provides a procedural data processing language designed for Hadoop. In contrast to Hive’s approach of writing logic-driven queries, with Pig you specify a series of steps to perform on the data. It’s closer to an everyday scripting language, but with a specialized set of functions that help with common data processing problems. It’s easy to break text up into component n-grams, for example, and then count how often each occurs. Other frequently used operations, such as filters and joins, are also supported. Pig is typically used when your problem (or your inclination) fits a procedural approach, but you need to do typical data processing operations rather than general-purpose calculations. Pig has been described as “the duct tape of Big Data” for its usefulness there, and it is often combined with custom streaming code written in a scripting language for more general operations.
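To give a feel for the step-by-step style, here is the n-gram counting example written as a short series of steps in Python. This is not Pig Latin, just an analogy; the function names `ngrams` and `count_ngrams` are my own, and a real Pig script would run each step across the cluster.

```python
from collections import Counter


def ngrams(tokens, n):
    """Return every run of n consecutive tokens as a tuple."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def count_ngrams(lines, n=2):
    # Step 1: load and tokenize each line.
    tokenized = [line.lower().split() for line in lines]
    # Step 2: break each line into its component n-grams.
    grams = [gram for tokens in tokenized for gram in ngrams(tokens, n)]
    # Step 3: group identical n-grams and count how often each occurs.
    return Counter(grams)


counts = count_ngrams(["big data is big data"])
print(counts.most_common(1))  # [(('big', 'data'), 2)]
```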

I hope this post answered some of your questions about Big Data and NoSQL, as these concepts are shaping the future of web development and web business applications across industries.